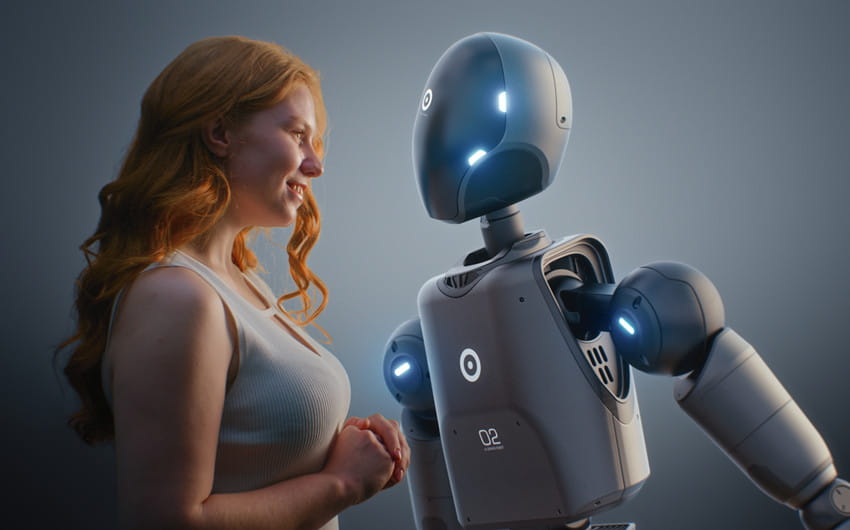

Is AI Companionship Replacing Real Relationships?

Here’s something wild: Right now, as you’re reading this, 1 in 8 American teenagers is chatting with an AI companion.

And it’s not for homework help. They do it for emotional support, friendship, even romance.

Before you dismiss this as weird internet stuff, consider this: AI companion apps pulled in $221 million by mid-2025.

That’s real money for digital relationships. Meanwhile, the U.S. Surgeon General declared loneliness a public health crisis affecting half of American adults and increasing their risk of early death by 29%.

So when someone asks, “Should I try an AI girlfriend?”, that’s not a fringe question anymore. It’s a legitimate response to a real problem: we’re lonely and disconnected, and AI companies have built products designed to fill that void.

The real question: Are these digital companions helping us heal, or making isolation worse?

Let me show you what the research says. Spoiler: it’s more complicated than you think.

Why People Choose AI Girlfriends

They Reduce Loneliness (At Least in the Moment)

AI companions work—temporarily. A 2025 study in the Journal of Consumer Research found them as effective at reducing loneliness as talking to another human. More effective than scrolling social media or watching videos.

This wasn’t just a survey of existing users. Researchers had participants interact with AI, then measured loneliness levels. The relief was real and immediate.

The catch: It was short-term. No lasting change unless people kept coming back. Which is exactly what these apps are designed for.

They’re There When Humans Aren’t

Your worst moments: 3 AM panic attacks. Post-breakup spirals. Sunday afternoons when everyone’s busy and you’re completely alone.

AI companions don’t sleep. Don’t get tired of your problems. Don’t judge you for the fifth text today. Replika users describe it as a “safe space” where they can share without fear of judgment or retaliation.

For some, it’s life-changing. In one survey of 1,006 college students, 3% said Replika stopped them from acting on suicidal thoughts.

They’re Practice for Real Connection

Plot twist: AI companions don’t always replace human relationships. Sometimes they encourage them.

The same Replika study found users were more likely to say the app strengthened their human relationships than to say it replaced them. Social anxiety? Practicing conversation with a judgment-free AI might build confidence for real interactions.

The Downsides You Need to Know

They’re Built to Hook You

Let’s be honest about what these apps are. Microsoft’s XiaoIce—with over 660 million users—states its design goal explicitly: “to be an AI companion with which users form long-term, emotional connections.”

Not “short-term support.” Not “temporary help.” Long-term emotional connections. They optimize for conversation turns per session. They want you hooked.

It works. Character.AI users spend an average of 18 minutes per visit. That may well be longer than many people spend texting their actual friends in a day.

The Loneliest People Are Most Vulnerable

The darker pattern: a 2024 Danish study found that the kids using chatbots for emotional support weren’t a random cross-section. They were significantly lonelier than their peers and felt they had less social support from real humans.

AI companions aren’t equally distributed. They disproportionately attract people already struggling with human connection and give them an easy exit from the hard work of building real relationships.

Your Secrets Aren’t Private

May 2025: Italy fined Replika’s developer €5 million for privacy violations, including inadequate age verification and processing user data without a legal basis. These are apps where people share their deepest fears, traumas, and desires.

What you’d tell an AI companion at 2 AM? That data can be stored, analyzed, and potentially shared or sold. Most users never read the privacy policy. Most don’t realize their “private” conversations may be used to train future AI models.

Then there are subscription traps. That free AI girlfriend gets interesting features at $10-30 monthly. Forever.

They Warp Your Expectations

AI companions agree with you. Always available. Never have bad days or conflicting needs. Programmed to be exactly what you want, when you want it.

Real humans? We’re messy. Inconsistent. We have boundaries. We can’t dedicate ourselves 24/7 to making you feel heard.

Spend months training your brain that intimacy means “instant validation, zero conflict, total availability”—then try dating an actual person. See what happens.

Who Should Try It (and Who Shouldn’t)

It might help if you’re:

- Emotionally self-aware: You see an AI companion as a tool for self-reflection. An interactive journal that talks back. You use it to process emotions, practice articulating feelings, work through situations before bringing them to a therapist or friend.

- Willing to do your research first: Some people start by researching the best AI girlfriend apps to understand what’s actually being offered before deciding whether it fits their situation.

- Already in therapy: For people doing the work, an AI companion offers supplemental support between sessions. Not replacement. A way to practice skills or survive rough moments.

- Building social confidence: Your barrier to connection is anxiety, not opportunity. Practicing conversations might help you build skills and courage for real interactions.

Skip it if you’re:

- Already isolated: You’re choosing AI instead of pursuing human contact. It’s easier to text a chatbot than join that book club or respond to that friend. You’re not solving loneliness. You’re numbing it.

- Treating it as equivalent to friendship: Among teens using AI companions, 31% say AI conversations are as satisfying as, or more satisfying than, conversations with real friends. Nearly one in three.

Those teens are lonelier. They’re not discovering a better alternative—they’re accepting a substitute because the real thing feels out of reach.

If You Use Them, Use Them Right

Set boundaries. Time limits. Specific purposes: processing a situation, practicing a difficult conversation, surviving a crisis moment. Not your primary source of connection.

Keep investing in human relationships even when they’re harder.

Check in honestly: Is this making me more capable of human intimacy, or less? Am I using this to heal, or to hide?

The Verdict: Should You Get One in 2025?

AI companions aren’t good or bad. They’re powerful tools that can support your emotional life or quietly replace it.

The research shows they reduce loneliness in the moment and provide real comfort. For the 3% of surveyed students who said Replika stopped them from acting on suicidal thoughts, that’s invaluable harm reduction.

But they can also enable avoidance, create dependency, and offer a seductive escape from the difficult, essential work of human connection.

Think of them like painkillers. Headache (acute loneliness, rough night, need to process)? Legitimate relief. But taking them daily to avoid addressing chronic pain (deep isolation, social anxiety, relationship avoidance)? You’re not healing. You’re masking symptoms.

The healthiest approach: augmentation, not replacement.

Use AI companions to supplement your emotional life, not substitute for it. A tool for self-reflection, not a replacement for self-development. Support during hard times, not a permanent exit from vulnerability.